Generative AI is transforming industries by enabling machines to create content, code, and even art autonomously. For developers and anyone interested in harnessing the power of this technology, understanding the Generative AI tech stack is crucial. This article explores the components and technologies involved in building a robust generative AI system, offering a comprehensive guide from A to Z.

Understanding Generative AI

Generative AI refers to algorithms that can produce new content (text, images, music, or code) by learning patterns from training data. Unlike traditional AI systems that focus on recognition and analysis, generative models produce new samples that resemble their training data. This capability is underpinned by machine learning models such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Transformers.

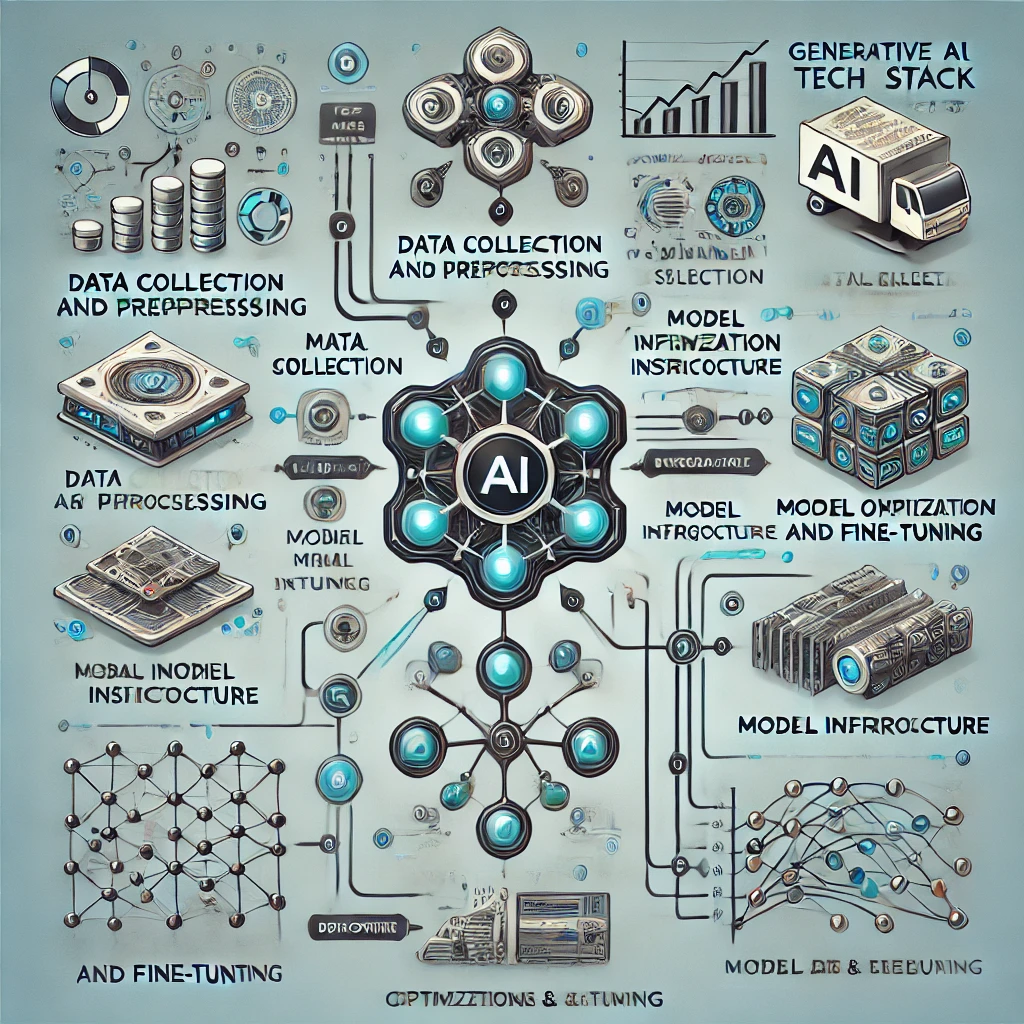

Key Components of the Generative AI Tech Stack

1. Data Collection and Preprocessing

The foundation of any generative AI system is data. High-quality, diverse datasets are crucial for training models to generate meaningful content. This process involves:

- Data Sourcing: Gathering large volumes of data from various sources like text corpora, image libraries, or audio samples.

- Data Cleaning: Removing noise, inconsistencies, and irrelevant information to ensure the data’s quality.

- Data Augmentation: Expanding the dataset by introducing variations, such as rotating images or paraphrasing sentences.
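
The three steps above can be sketched on a handful of text samples. This is a minimal illustration rather than a production pipeline: the sample strings and the function names (`clean_text`, `deduplicate`, `augment_by_word_dropout`) are made up for this example, and random word dropout stands in for paraphrasing, which normally needs a model of its own.

```python
import random
import re


def clean_text(raw: str) -> str:
    """Strip HTML-like tags and collapse runs of whitespace."""
    no_tags = re.sub(r"<[^>]+>", " ", raw)
    return re.sub(r"\s+", " ", no_tags).strip()


def deduplicate(samples: list[str]) -> list[str]:
    """Drop empty strings and exact duplicates, keeping first-seen order."""
    seen = set()
    unique = []
    for sample in samples:
        if sample and sample not in seen:
            seen.add(sample)
            unique.append(sample)
    return unique


def augment_by_word_dropout(text: str, drop_prob: float, rng: random.Random) -> str:
    """Create a variation by randomly dropping words (a crude text augmentation)."""
    kept = [word for word in text.split() if rng.random() >= drop_prob]
    return " ".join(kept) if kept else text


# Data sourcing: hypothetical raw samples, as they might arrive from a scrape.
raw_samples = [
    "<p>Generative  models create   new content.</p>",
    "Generative models create new content.",
    "   ",
    "GANs pair a generator\nwith a discriminator.",
]

# Data cleaning: normalize each sample, then remove empties and duplicates.
cleaned = deduplicate([clean_text(s) for s in raw_samples])

# Data augmentation: add one noisy variation per cleaned sample.
rng = random.Random(0)  # fixed seed so the run is repeatable
augmented = [augment_by_word_dropout(s, drop_prob=0.2, rng=rng) for s in cleaned]
dataset = cleaned + augmented
```

Here the four raw samples collapse to two clean ones, and augmentation doubles the dataset back to four entries. For images, the equivalent augmentation step would be rotations, flips, or crops.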

2. Model Selection

Choosing the right model is critical for the success of a generative AI project. Popular models in the generative AI tech stack include:

- Generative Adversarial Networks (GANs): GANs consist of two neural networks, a generator and a discriminator, that are trained against each other: the generator produces candidate samples, and the discriminator tries to tell them apart from real data. GANs are widely used in image generation, video synthesis, and even text-to-image tasks.

- Variational Autoencoders (VAEs): VAEs encode inputs into a continuous latent space and decode samples drawn from it, which suits tasks like producing smooth variations of an image or generating human-like faces.

- Transformers: Models like GPT (Generative Pretrained Transformer) are revolutionizing text generation, enabling AI to write coherent articles, code, and even poetry.
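
To make the "work against each other" idea behind GANs concrete, here is a small sketch of the two loss functions usually paired in GAN training: binary cross-entropy for the discriminator and the non-saturating loss for the generator. The discriminator outputs below are hypothetical numbers chosen for illustration; no networks are trained here.

```python
import math


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """Binary cross-entropy: real samples should score near 1, fakes near 0."""
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: the generator wants fakes scored near 1."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs (probability that a sample is real).
d_real = [0.9, 0.8]
early_fakes = [0.1, 0.2]  # early in training: fakes are easy to spot
later_fakes = [0.6, 0.7]  # later: the generator fools the discriminator more often

print("early:", discriminator_loss(d_real, early_fakes), generator_loss(early_fakes))
print("later:", discriminator_loss(d_real, later_fakes), generator_loss(later_fakes))
```

As the fakes become more convincing, the generator's loss falls while the discriminator's loss rises, which is the tension that drives both networks to improve.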

3. Training Infrastructure

Training generative AI models requires significant computational power. Key elements of the infrastructure include:

- GPUs and TPUs: Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) are essential for handling the massive parallel computations needed for training deep learning models.

- Distributed Computing: Leveraging multiple machines and cloud computing services like AWS or Google Cloud to train models faster and more efficiently.

- Frameworks: Using deep learning frameworks like TensorFlow, PyTorch, or JAX to build and train models. These frameworks provide tools and libraries that simplify the development process.
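
One common way to spread training across machines is data parallelism: each worker computes gradients on its own slice of the batch, and the gradients are averaged before the shared weights are updated. The sketch below simulates that in a single process for a one-parameter model `y = w * x`. The data, worker count, and learning rate are assumptions made for the example; real frameworks do the averaging step over a network.

```python
def mse_gradient(w: float, xs: list[float], ys: list[float]) -> float:
    """Gradient of mean squared error for the model y = w * x, with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def shard(items: list[float], num_workers: int) -> list[list[float]]:
    """Split a list into equal-sized contiguous shards, one per worker."""
    size = len(items) // num_workers
    return [items[i * size:(i + 1) * size] for i in range(num_workers)]


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [3.0 * x for x in xs]  # synthetic data generated with a true weight of 3.0
num_workers = 4
learning_rate = 0.01
w = 0.0

x_shards = shard(xs, num_workers)
y_shards = shard(ys, num_workers)

for step in range(200):
    # Each simulated worker computes a gradient on its own shard only.
    local_grads = [mse_gradient(w, x_s, y_s) for x_s, y_s in zip(x_shards, y_shards)]
    # "All-reduce": average the local gradients so every worker applies the same update.
    avg_grad = sum(local_grads) / num_workers
    w -= learning_rate * avg_grad

print(w)
```

Because the shards are the same size, the averaged gradient matches the gradient computed on the full batch, so the result is the same as single-machine training while the per-step work is divided among the workers.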

4. Model Optimization and Fine-Tuning

After training, models often require fine-tuning to improve their performance on specific tasks. This involves:

- Hyperparameter Tuning: Adjusting settings like the learning rate, batch size, and number of epochs to improve the model’s performance on held-out validation data.

- Transfer Learning: Starting from a model pre-trained on a related task and fine-tuning it on a specific dataset, saving time and resources.

- Regularization Techniques: Implementing methods like dropout or weight decay to prevent overfitting and ensure the model generalizes well to new data.
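
Hyperparameter tuning and regularization can be illustrated together with a small grid search. The sketch below fits a one-parameter model with SGD, treats the learning rate and weight decay as the hyperparameters, and picks the combination with the lowest validation loss. The synthetic data, the grid values, and the function names are assumptions for this example; real projects typically rely on a framework's optimizer and a dedicated tuning tool.

```python
import itertools
import random


def train(train_data: list[tuple[float, float]], learning_rate: float,
          weight_decay: float, epochs: int) -> float:
    """Fit y = w * x with SGD; weight decay adds an L2 penalty term to the gradient."""
    w = 0.0
    for _ in range(epochs):
        for x, y in train_data:
            grad = 2.0 * (w * x - y) * x + 2.0 * weight_decay * w
            w -= learning_rate * grad
    return w


def validation_loss(w: float, val_data: list[tuple[float, float]]) -> float:
    """Mean squared error on data the model was not trained on."""
    return sum((w * x - y) ** 2 for x, y in val_data) / len(val_data)


# Synthetic data: y = 2x plus a little noise (values are made up for the example).
rng = random.Random(0)
data = [(i / 10, 2.0 * (i / 10) + rng.gauss(0.0, 0.1)) for i in range(1, 21)]
rng.shuffle(data)
train_data, val_data = data[:15], data[15:]

grid = {
    "learning_rate": [0.001, 0.01, 0.1],
    "weight_decay": [0.0, 0.01, 0.1],
}

results = []
for lr, wd in itertools.product(grid["learning_rate"], grid["weight_decay"]):
    w = train(train_data, lr, wd, epochs=50)
    results.append((validation_loss(w, val_data), lr, wd, w))

# Pick the configuration with the lowest validation loss.
best_loss, best_lr, best_wd, best_w = min(results)
print(f"best: lr={best_lr}, weight_decay={best_wd}, w={best_w:.3f}, loss={best_loss:.4f}")
```

Selecting on validation loss rather than training loss is the important design choice: it rewards settings that generalize instead of settings that merely fit the training set.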

5. Deployment and Integration

Once a generative AI model is trained and optimized, the next step is deployment. This phase involves:

- Model Serving: Using platforms like TensorFlow Serving or NVIDIA Triton to deploy models in production environments.

- API Integration: Exposing the model’s capabilities via APIs, allowing other applications to interact with the AI system.

- Monitoring and Maintenance: Continuously monitoring the model’s performance, retraining it with new data, and maintaining the system to ensure it remains effective over time.
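
As a rough picture of API integration, the sketch below wraps a stand-in model behind a small HTTP endpoint using only Python's standard library. The `/generate` path, the JSON field names, and the `generate` function are hypothetical choices for this example. A production deployment would normally sit behind a dedicated serving platform such as the ones named above.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate(prompt: str) -> str:
    """Placeholder for a real model call; it only echoes the prompt."""
    return f"[generated continuation of: {prompt}]"


class GenerateHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/generate":
            self.send_error(404, "Unknown endpoint")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            prompt = payload["prompt"]
        except (ValueError, KeyError, TypeError):
            self.send_error(400, "Expected a JSON body with a 'prompt' field")
            return
        body = json.dumps({"output": generate(prompt)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging to keep the example quiet


def run_demo(prompt: str) -> dict:
    """Start the server on a free local port, send one request, then shut down."""
    server = HTTPServer(("127.0.0.1", 0), GenerateHandler)  # port 0: OS picks a port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        request = urllib.request.Request(
            f"http://127.0.0.1:{server.server_port}/generate",
            data=json.dumps({"prompt": prompt}).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        # Bypass any system proxy settings for this local call.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(request, timeout=5) as response:
            return json.loads(response.read())
    finally:
        server.shutdown()
        server.server_close()
```

Calling `run_demo("Hello")` starts the server, posts one prompt to it, and returns the parsed JSON response. Swapping the body of `generate` for a real model call is the only change the handler would need.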

Applications of the Generative AI Tech Stack

The potential applications of generative AI are vast and varied. Some notable use cases include:

- Content Creation: AI-generated text, music, and art are becoming increasingly popular, enabling creators to push the boundaries of creativity.

- Code Generation: Tools like GitHub Copilot use generative AI to assist developers by generating code snippets, reducing development time.

- Healthcare: Generative AI is used to create synthetic medical data for training models, to design new drug candidates, and even to draft personalized treatment plans.

Challenges and Considerations

While generative AI offers immense potential, it also presents challenges:

- Ethical Concerns: The ability to generate realistic content can be misused, leading to concerns about deepfakes, misinformation, and the ethical implications of AI-generated content.

- Bias in AI: Generative models can perpetuate biases present in the training data, leading to biased outcomes. It’s crucial to implement fairness and bias mitigation techniques.

- Resource Intensive: Training and deploying generative AI models requires significant computational resources, which makes these systems expensive for smaller organizations to implement.

Conclusion: The Future of Generative AI

The Generative AI tech stack is evolving rapidly, with advancements in model architecture, training techniques, and deployment strategies. As technology progresses, generative AI will continue to unlock new possibilities across various industries. For organizations looking to leverage this technology, understanding and investing in the right tech stack is key to success.